Routing respondents directly from one survey to the next can lead to unreliable results. Do your sample providers route respondents from survey to survey?

When choosing a sample provider, one question to ask is whether the company routes its respondents directly from one survey to another. Every survey panel must strike a balance between offering enough survey opportunities to keep respondents engaged and not sending invitations so often that people drop out or start providing low-quality data. One method some vendors use to maximize the number of surveys taken is to route respondents from one survey directly into another. For example, if you start a survey but do not meet the screener criteria, many companies will redirect you to another survey you may be eligible for rather than ending your participation at that first survey. Other times, you may be moved from one survey to the next without realizing that the questions you are answering come from separate instruments.

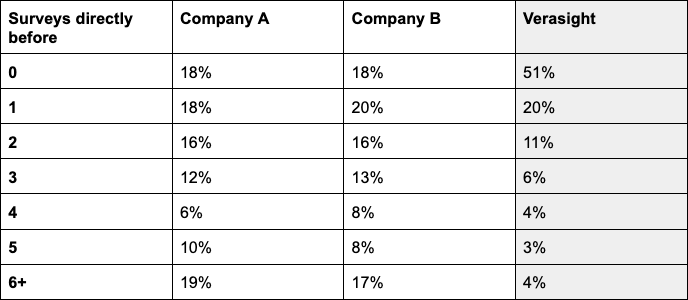

We recently compared the experiences of Verasight respondents with those of respondents from two well-respected sample providers, Company A and Company B. We asked respondents how many surveys they had taken directly before beginning their current survey. Company A and Company B respondents reported taking many more consecutive surveys than Verasight respondents: nearly 20% of Company A and Company B respondents had taken 6 or more surveys directly before their current survey, compared with just 4% of Verasight respondents.

Compared with two large sample providers, Verasight respondents take the fewest consecutive surveys.

Does it matter if respondents are routed into your survey from a previous survey?

For some projects, respondents being routed directly from a prior survey is likely not a concern. For example, if your questions are unlikely to be influenced by thoughts or considerations that a previous survey may have primed, lingering effects from earlier survey topics may not worry you. Similarly, if fatigue from taking many consecutive surveys is not a concern, many sample providers may be equally good choices for your project. If, by contrast, your survey covers topics that are more likely to be influenced by a respondent's mindset, emotional state, or level of fatigue, it is important to consider how back-to-back survey taking caused by routing could affect your results. In either case, we recommend asking your sample provider about its routing policies and requesting that respondents who were routed to your survey from another survey be identified.

Verasight Never Uses Routing

Although panelists can sometimes choose to take multiple surveys in one day, Verasight never routes respondents directly from one survey to another. Verasight panel members can access surveys on our platform at any time and receive an email notification when a new survey is available on their dashboard. When Verasight panel members take multiple surveys in a day, they do so by conscious choice. When a respondent completes a survey, they are not automatically taken back to their dashboard of available surveys; instead, they are shown their point totals and other account information. To take another survey, respondents must navigate out of their rewards window and back to their dashboard. This builds in a pause that lets respondents shift their mental focus before beginning a new survey, if they choose to take one. Finally, although respondents can take multiple surveys in a short period, our results show that few do: more than half (51%) of Verasight respondents took no surveys prior to their current survey, and only 4% took 6 or more surveys directly before beginning it.

We want our clients to feel confident that their data reflect the questions posed to respondents, not the surveys respondents took immediately beforehand! Curious whether Verasight is the right fit for you? Reach out today to learn more.

Survey Details:

Verasight:

Benchmarking Survey A was conducted by Verasight from January 30 to February 23, 2023. The sample size is 1,000 respondents. All respondents were recruited from an existing Verasight panel, composed of individuals recruited via both address-based probability sampling and online advertisements.

The data are weighted to match the Current Population Survey on age, race/ethnicity, sex, income, education, region, and metropolitan status, as well as to population benchmarks of partisanship and 2020 presidential vote. The margin of error, which incorporates the design effect due to weighting, is +/- 3.1%.

To ensure data quality, the Verasight data team implemented a number of quality assurance procedures. This process included screening out responses corresponding to foreign IP addresses, potential duplicate respondents, potential non-human responses, and respondents failing attention and straight-lining checks.

Company A:

Benchmarking Survey B was conducted by Company A from January 20 to January 26, 2023. The sample size is 1,002 respondents. Respondents were recruited from a variety of vendors sampled through the Company A survey sample marketplace.

The data are weighted to match the Current Population Survey on age, race/ethnicity, sex, income, education, region, and metropolitan status, as well as to population benchmarks of partisanship and 2020 presidential vote. The margin of error, which incorporates the design effect due to weighting, is +/- 3.4%.

Company B:

Benchmarking Survey C was conducted by Verasight from February 9 to February 23, 2023. The sample size is 1,014 respondents. Respondents were recruited from a variety of vendors sampled through the Company B survey sample marketplace.

The data are weighted to match the Current Population Survey on age, race/ethnicity, sex, income, education, region, and metropolitan status, as well as to population benchmarks of partisanship and 2020 presidential vote. The margin of error, which incorporates the design effect due to weighting, is +/- 3.3%.

To ensure data quality, Company B implemented a number of quality assurance procedures. This process included screening out respondents who failed straight-lining checks, gave nonsensical answers to open-ended questions, or showed patterned responses.
